MD-Workbench

The MD-Workbench benchmark is an MPI-parallel benchmark that measures metadata performance together with small-file performance. It aims to simulate actual user activities on a file system, such as compilation. In contrast to other metadata benchmarks, it produces access patterns that existing (parallel) file systems cannot easily cache and optimize. As a consequence, it reports much lower performance than mdtest: on our systems, we observed about 10k IOPS with MD-Workbench versus an unrealistic 1M IOPS with mdtest. See the example below for a single local HDD, which shows how well file systems can cache “batched” creates, deletes, and lookups.
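To make the notion of “batched” operations concrete, the following Python sketch contrasts two operation sequences without touching a file system. It is an illustration under simplifying assumptions, not MD-Workbench's implementation: `batched_pattern` mimics an mdtest-style run that issues all creates, then all lookups, then all deletes, while `interleaved_pattern` is a hypothetical mixed pattern in which each read of an existing object is followed by writing a new object and deleting the one that was read. All function names are made up for this example.

```python
from collections import deque


def batched_pattern(n):
    """mdtest-style batches: all creates, then all lookups, then all deletes."""
    return ([("create", i) for i in range(n)]
            + [("stat", i) for i in range(n)]
            + [("delete", i) for i in range(n)])


def interleaved_pattern(n, precreated):
    """Hypothetical mixed pattern: per object, read an existing object,
    write a new one and delete the object that was read."""
    pool = deque(range(precreated))  # names of objects that currently exist
    next_name = precreated
    ops = []
    for _ in range(n):
        victim = pool.popleft()            # oldest existing object
        ops.append(("read", victim))
        ops.append(("write", next_name))   # create a fresh object
        ops.append(("delete", victim))
        pool.append(next_name)
        next_name += 1
    return ops


def op_switches(ops):
    """Count how often consecutive operations differ in type."""
    return sum(1 for a, b in zip(ops, ops[1:]) if a[0] != b[0])


print(op_switches(batched_pattern(1000)))            # 2
print(op_switches(interleaved_pattern(1000, 3000)))  # 2999
```

With 1,000 objects, the batched sequence changes operation type only twice, whereas the interleaved one changes type after every single operation. Long runs of identical operations are the kind of pattern that file systems can cache and aggregate well.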

The pattern is derived from an access pattern of the Parabench benchmark, which has been used at DKRZ for acceptance testing.

Usage

Example Output

The following example shows the output of a run with a single process.

The command-line arguments are printed up to Line 15 (process-reports). Then, on Line 17, the arguments of the interface plugin (here POSIX) are printed, specifying the root directory. Starting with Line 19, the results of the three phases (precreate, benchmark, and cleanup) are printed. For each phase, the aggregated result across all processes is given first, followed by the individual result of each process, prefixed with its rank (here 0:). The individual results are obtained by stopping the timer before the MPI barrier.
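Aggregated and per-process result lines can be told apart by their prefix: aggregated lines start with the phase name, per-process lines with the rank. The following Python sketch parses a single result line into its fields. The line format is inferred from the example output below and is not a documented interface, so it may differ between MD-Workbench versions; `parse_result` is a hypothetical helper.

```python
import re

# Optional "rank:" prefix, phase name, elapsed time, value/unit pairs, error count.
RESULT_LINE = re.compile(
    r"^(?:(?P<rank>\d+):\s+)?(?P<phase>\w+)\s+(?P<time>[\d.]+)s\s+"
    r"(?P<body>.*?)\s*\((?P<errs>\d+) errs\)$"
)


def parse_result(line):
    """Split one result line into its fields; return None for other lines."""
    m = RESULT_LINE.match(line.strip())
    if m is None:
        return None
    tokens = m.group("body").split()
    # The body alternates value and unit, e.g. "10000 obj 15320.1 obj/s ...".
    values = {unit: float(value) for value, unit in zip(tokens[::2], tokens[1::2])}
    rank = m.group("rank")
    return {
        "rank": None if rank is None else int(rank),  # None = aggregated result
        "phase": m.group("phase"),
        "time_s": float(m.group("time")),
        "values": values,
        "errors": int(m.group("errs")),
    }


agg = parse_result("benchmark 0.7s 10000 obj 15320.1 obj/s 114.0 Mib/s (0 errs)")
per = parse_result("0: benchmark 0.7s 10000 obj 15320.1 obj/s 114.0 Mib/s (0 errs)")
print(agg["rank"], agg["values"]["obj/s"])  # None 15320.1
print(per["rank"], per["phase"])            # 0 benchmark
```

Lines that are not phase results, such as the argument lines or the final "Total runtime" line, do not match the pattern and yield None.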

During the precreation phase, about 26,600 objects/s are created, corresponding to 99.1 MiB/s. In the first iteration of the benchmark phase, the rate drops to about 15,300 objects/s; this is the number of objects per second for each of which a read, a write, and a delete are performed. This results in a combined read/write throughput of 114 MiB/s. As specified by the iterations parameter, the benchmark phase is repeated 5 times. In this case, performance increases after the first iteration to about 22,600 to 23,400 objects/s (about 168 to 174 MiB/s).

    MD-Workbench total objects: 80000 (version: 8049faa@master) time: 2016-12-14 17:01:36
    offset=1
    interface=posix
    obj-per-proc=1000
    precreate-per-set=3000
    data-sets=10
    lim-free-mem=1000
    lim-free-mem-phase=0
    object-size=3900
    iterations=5
    start-index=0
    run-precreate
    run-benchmark
    run-cleanup
    process-reports

    root-dir=/mnt/test/out

    precreate 1.1s 10 dset 30000 obj 8.878 dset/s 26634.7 obj/s 99.1 Mib/s (0 errs)
    0: precreate 1.1s 10 dset 30000 obj 8.878 dset/s 26634.8 obj/s 99.1 Mib/s (0 errs)
    benchmark 0.7s 10000 obj 15320.1 obj/s 114.0 Mib/s (0 errs)
    0: benchmark 0.7s 10000 obj 15320.1 obj/s 114.0 Mib/s (0 errs)
    benchmark 0.4s 10000 obj 22744.1 obj/s 169.2 Mib/s (0 errs)
    0: benchmark 0.4s 10000 obj 22744.2 obj/s 169.2 Mib/s (0 errs)
    benchmark 0.4s 10000 obj 22561.8 obj/s 167.8 Mib/s (0 errs)
    0: benchmark 0.4s 10000 obj 22561.9 obj/s 167.8 Mib/s (0 errs)
    benchmark 0.4s 10000 obj 22754.7 obj/s 169.3 Mib/s (0 errs)
    0: benchmark 0.4s 10000 obj 22754.8 obj/s 169.3 Mib/s (0 errs)
    benchmark 0.4s 10000 obj 23369.9 obj/s 173.8 Mib/s (0 errs)
    0: benchmark 0.4s 10000 obj 23370.0 obj/s 173.8 Mib/s (0 errs)
    cleanup 0.3s 30000 obj 10 dset 90796.6 obj/s 30.266 dset/s (0 errs)
    0: cleanup 0.3s 30000 obj 10 dset 90797.2 obj/s 30.266 dset/s (0 errs)
    Total runtime: 4s time: 2016-12-14 17:01:43
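The throughput figures can be cross-checked with a little arithmetic. The Python sketch below assumes that each reported Mib/s value equals the object rate times object-size=3900 bytes divided by 2^20, with every benchmark object counted twice (one read and one write), and that the 80000 total objects are the precreated objects plus the benchmark objects of all 5 iterations. This interpretation is inferred from the numbers in the example output, not taken from the MD-Workbench source; `mib_per_s` is a hypothetical helper.

```python
MIB = 1024 * 1024   # bytes per MiB
OBJECT_SIZE = 3900  # object-size=3900 bytes, as printed in the example output


def mib_per_s(obj_per_s, transfers_per_obj=1, object_size=OBJECT_SIZE):
    """Data rate implied by an object rate.

    transfers_per_obj is an assumption: 1 for precreate (write only),
    2 for the benchmark phase (one read plus one write per object).
    """
    return obj_per_s * object_size * transfers_per_obj / MIB


print(round(mib_per_s(26634.7), 1))     # precreate: 99.1
print(round(mib_per_s(15320.1, 2), 1))  # first benchmark iteration: 114.0
print(round(mib_per_s(22744.1, 2), 1))  # second benchmark iteration: 169.2

# Total objects: precreate-per-set x data-sets, plus obj-per-proc x data-sets
# for each of the 5 iterations (interpretation inferred from the numbers).
precreate_objs = 3000 * 10
bench_objs_per_iteration = 1000 * 10
print(precreate_objs + 5 * bench_objs_per_iteration)  # 80000
```

All three computed rates match the values printed in the output, which supports, but does not prove, the read-plus-write interpretation of the benchmark throughput.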

Example study

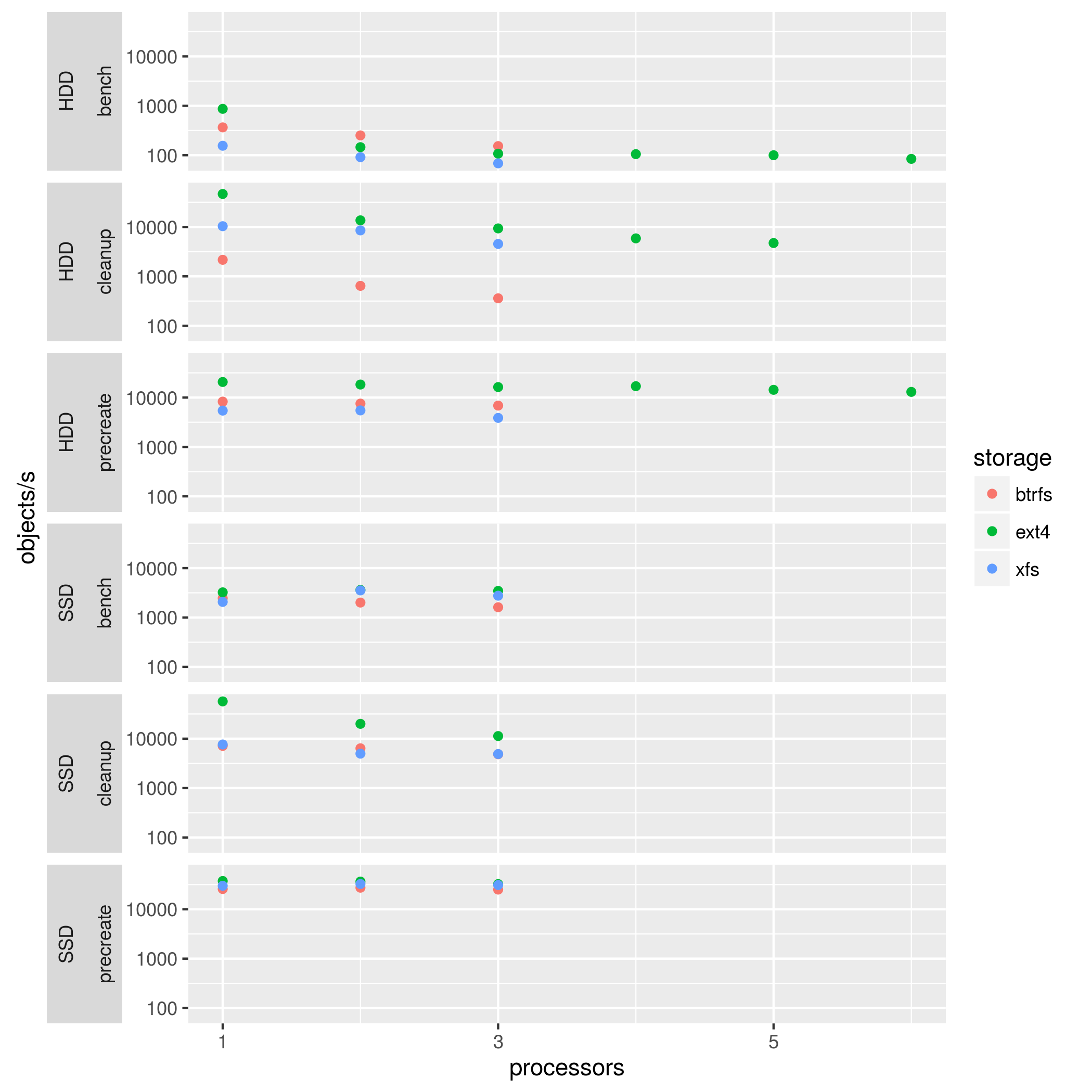

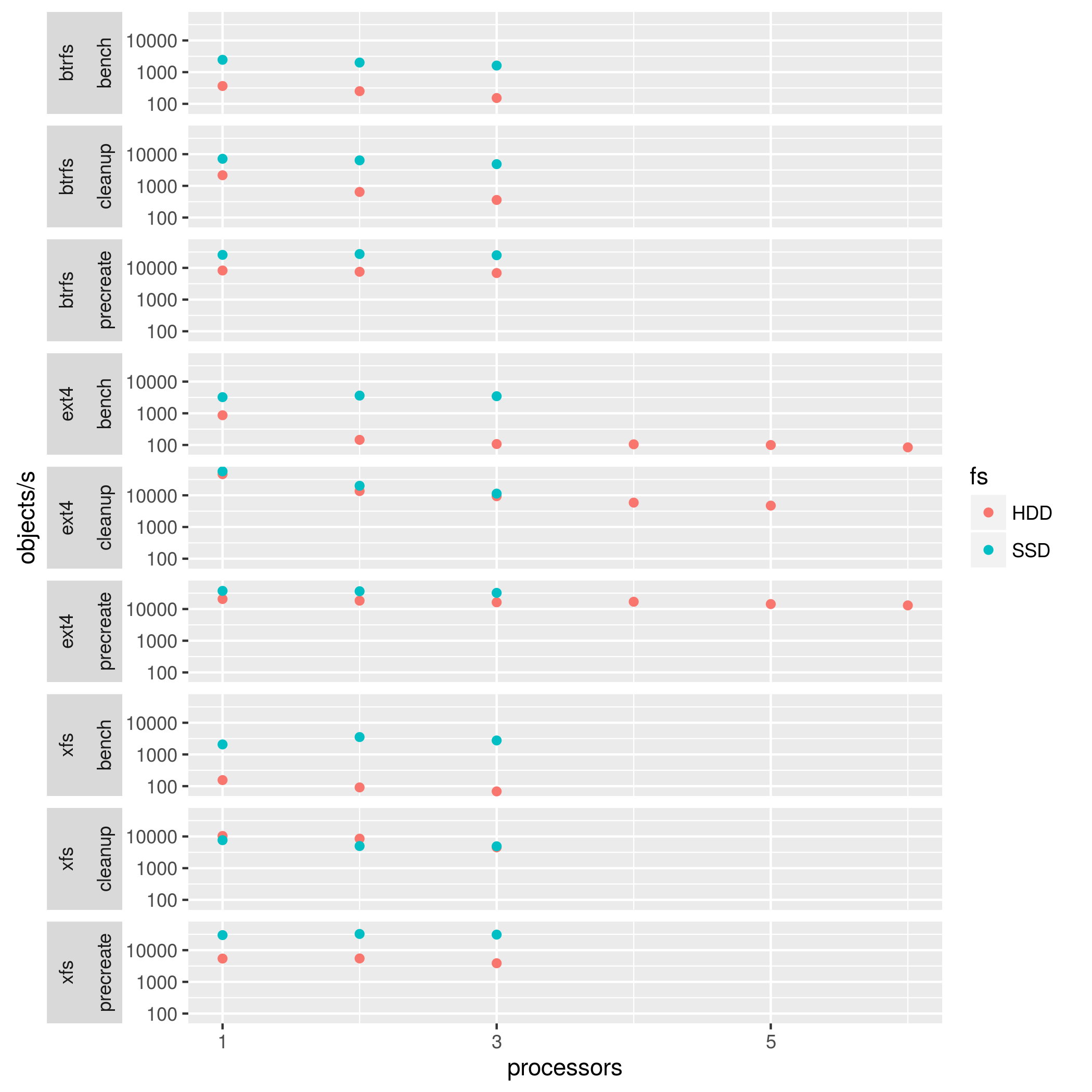

An evaluation of the three file systems BTRFS, XFS, and EXT4 was conducted on an HDD and an SSD in a single-node machine. For each test, the file system is freshly created on a fixed partition of the respective block device.

System description

- Operating system: CentOS 7

- Kernel: 3.10.0-327.36.3.el7.x86_64 x86_64 GNU/Linux

- CPU: Intel(R) Xeon(R) CPU E31275 @ 3.40GHz (4 cores, 8 hardware threads)

- Memory: 16 GByte

- Block devices:

  - SSD: INTEL SA2CW160G3

  - HDD: WDC WD20EARS-07M

Results

The following settings have been used:

- Pre-created objects per dataset: 10,000 / number of processes

- Datasets: 50

- Iterations: 1000 for EXT4, 200 for the others (as their performance is much lower)

- Free memory: 1000

- Process count: 1-5 for EXT4, 1-3 for the others
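The settings above expand into one run per device, file system, and process count. The Python sketch below enumerates these runs. It assumes that “pre-created objects per dataset” and “free memory” correspond to the precreate-per-set and lim-free-mem parameters shown in the example output, and that 10,000 / number of processes is rounded down; both are assumptions, as is the helper name `study_runs`.

```python
# Iterations and process counts per file system, as listed in the settings.
FILESYSTEMS = {
    "ext4": (1000, range(1, 6)),
    "xfs": (200, range(1, 4)),
    "btrfs": (200, range(1, 4)),
}
DEVICES = ("hdd", "ssd")


def study_runs():
    """Enumerate one parameter set per (device, file system, process count)."""
    runs = []
    for device in DEVICES:
        for fs, (iterations, process_counts) in FILESYSTEMS.items():
            for n in process_counts:
                runs.append({
                    "device": device,
                    "fs": fs,
                    "processes": n,
                    # Assumed mapping; floor division keeps the total <= 10,000.
                    "precreate-per-set": 10_000 // n,
                    "data-sets": 50,
                    "iterations": iterations,
                    "lim-free-mem": 1000,  # assumed to be the "free memory" setting
                })
    return runs


runs = study_runs()
print(len(runs))  # 2 devices x (5 + 3 + 3) process counts = 22
```

Dividing the pre-created objects by the process count keeps the total number of objects per dataset at roughly 10,000 regardless of how many processes run, so runs with different process counts work on comparably sized datasets.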

The graphs above show the results for the three phases.

It can be seen that precreate and cleanup performance does not degrade much as the process count increases, and that it exceeds the hardware capabilities (the HDD has a seek time of 7 ms). Thanks to the optimizations in the file systems, the SSD and HDD behave similarly for create and cleanup. In the benchmark phase, however, the characteristics of the underlying hardware become visible for all file systems.
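A back-of-the-envelope estimate shows why such rates cannot come from the disk alone. The Python sketch below assumes that every metadata operation costs one random seek of about 7 ms on the HDD and ignores rotational latency and transfer time; it is a rough bound, not a model of how BTRFS, XFS, or EXT4 actually schedule I/O.

```python
SEEK_TIME_S = 0.007  # about 7 ms average seek time of the HDD

# Upper bound on random operations per second if each needs one seek.
max_random_ops = 1 / SEEK_TIME_S
print(round(max_random_ops))  # 143

# Time the precreate phase would take if each of its objects
# (10,000 per dataset x 50 datasets) required one seek.
objects = 10_000 * 50
print(round(objects * SEEK_TIME_S))  # 3500 (seconds)
```

Create and cleanup rates well above roughly 143 operations per second therefore indicate that the file systems combine these operations instead of issuing one seek per operation.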

Details and measurement results are provided in the repository: https://github.com/JulianKunkel/md-workbench-io-results/

More details are given in this report.