Next Generation Interfaces

The efficient, convenient, and robust execution of data-driven workflows and enhanced data management are key to productivity in computer-aided Research, Development and Engineering (RD&E). Still, the storage stack is based on low-level POSIX I/O (or on objects in cloud storage). The forum would bring together vendors, storage experts, and users to discuss key features of alternative APIs and to establish governance strategies.

We are exploring the establishment of an open, community-driven, next-generation data-driven interface, in a similar fashion to existing forums. The envisioned coarse-grained API aims to overcome current obstacles for highly parallel workflows, but it would also be beneficial in the domain of big data and even on desktop PCs. It offers the opportunity to create a new ecosystem.

The envisioned API abstracts storage and data-flow computation with the clear goal of a separation of concerns between the definition of tasks and their execution on specific hardware. Additionally, it offers means to express workflow execution and lifecycle management. This allows smart schedulers to dispatch compute and I/O across the available hardware in an efficient manner. Since the user shall not care about data and compute locations, we coin the term liquid computing, which extends stream processing. We aim to support the development of a prototype on top of existing software technology (e.g. NetCDF, HDF5, iRODS, libfabric, parallel file systems, and NoSQL solutions), but we remain agnostic to the specific solution and do not intend to compete with these solutions. The final component is the question of how to enable vendors to implement differentiating optimizations without violating the common interface.
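The separation between task definition and execution can be illustrated with a minimal Python sketch. It is not the NGI API, which is yet to be defined; the names `Task` and `run_workflow` are hypothetical and chosen only for illustration. Tasks refer to data by logical names only, and a toy scheduler derives the execution order from the data dependencies. A real scheduler would additionally decide where each task runs and where each dataset is stored.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter  # standard library, Python 3.9+
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Task:
    """Hypothetical task: names its data logically, with no paths or hosts."""
    name: str
    inputs: Tuple[str, ...]
    output: str
    func: Callable[..., object]


def run_workflow(tasks: List[Task], datasets: Dict[str, object]) -> Dict[str, object]:
    """Toy scheduler: order tasks by their data dependencies and run them locally."""
    producer = {t.output: t for t in tasks}
    # A task depends on every task that produces one of its inputs.
    graph = {
        t.name: {producer[d].name for d in t.inputs if d in producer}
        for t in tasks
    }
    by_name = {t.name: t for t in tasks}
    results = dict(datasets)
    for name in TopologicalSorter(graph).static_order():
        task = by_name[name]
        results[task.output] = task.func(*(results[d] for d in task.inputs))
    return results


# The user lists tasks in any order; the scheduler works out the sequence.
tasks = [
    Task("mean", ("cleaned",), "average", lambda xs: sum(xs) / len(xs)),
    Task("clean", ("raw",), "cleaned", lambda xs: [x for x in xs if x >= 0]),
]
out = run_workflow(tasks, {"raw": [4, -1, 8]})
print(out["average"])
```

Because the tasks never mention files, nodes, or storage systems, the same workflow description could in principle be handed to a different scheduler or hardware setup without modification.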

Main features of the resulting prototype could be:

- Smart hardware and software components

- Storage and compute are covered together

- User metadata and workflows as first-class citizens

- Self-aware instead of unconscious

- Improving over time (self-learning, hardware upgrades)

We explain what we mean by this using use cases.

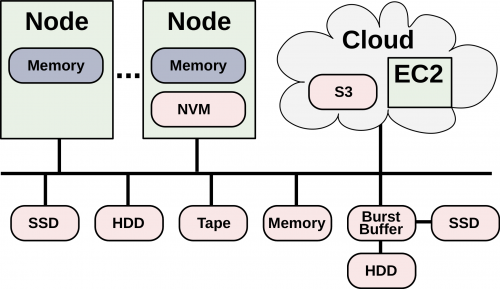

For example, consider a heterogeneous storage landscape:

The system shall make placement decisions, including partial replication of data, depending on the availability and characteristics of the storage, while also considering the usage patterns, particularly those of the workflows. Thus, all available storage shall be used concurrently, with data stored and used where it is best suited. In contrast, existing systems rely on data migration and policies.
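As a rough illustration of such a placement decision, the following Python sketch picks a storage tier from the storage characteristics and an expected usage pattern. Everything in it is an assumption made for illustration: the `Tier` and `Usage` types, the tier figures, and the simple cost model are hypothetical and do not describe an existing system. Partial replication and learned usage patterns are left out.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Tier:
    """Hypothetical description of one storage system."""
    name: str
    free_gb: float
    bandwidth_gbs: float  # sustained throughput in GB/s
    latency_ms: float     # typical access latency


@dataclass
class Usage:
    """Hypothetical expected usage pattern of a dataset."""
    size_gb: float
    reads_per_day: float
    random_access: bool


def cost(tier: Tier, usage: Usage) -> float:
    """Estimated access time per day in seconds (lower is better)."""
    transfer = usage.size_gb / tier.bandwidth_gbs
    # Assumption: a random-access read pays the latency 1000 times.
    seeks = 1000 if usage.random_access else 1
    return usage.reads_per_day * (transfer + seeks * tier.latency_ms / 1000.0)


def place(tiers: List[Tier], usage: Usage) -> Tier:
    """Choose the cheapest tier that has enough free capacity."""
    candidates = [t for t in tiers if t.free_gb >= usage.size_gb]
    if not candidates:
        raise ValueError("no tier has enough free capacity")
    return min(candidates, key=lambda t: cost(t, usage))


tiers = [
    Tier("nvme", free_gb=100, bandwidth_gbs=5.0, latency_ms=0.1),
    Tier("disk", free_gb=10_000, bandwidth_gbs=1.0, latency_ms=10.0),
    Tier("tape", free_gb=1_000_000, bandwidth_gbs=0.3, latency_ms=60_000.0),
]
hot = Usage(size_gb=50, reads_per_day=20, random_access=True)
archive = Usage(size_gb=500, reads_per_day=0.01, random_access=False)
print(place(tiers, hot).name, place(tiers, archive).name)
```

The point of the sketch is that the decision is computed from storage properties and usage information rather than fixed by a migration policy; a realistic cost model would also weigh factors such as price, reliability, and current load.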

Standardization

We are in the process of establishing the NGI Forum, which curates the development of the APIs. The process will follow the model of the successful MPI Forum. The approach is sketched in the figure below; members are experts from domain science, industry, and data centers, and they form the bodies. The activity is led by the elected steering board. Topic-specific workgroups and committees develop the standard (data model and APIs), driven by relevant use cases that span from workflows to code snippets. Ultimately, we will support the creation of a reference implementation based on state-of-the-art technology that demonstrates the approach on the use cases. We are aware that this endeavor is challenging; therefore, we do not expect NGI 1.0 to include all features envisioned for the final version.

We expect that vendors and researchers will embrace the open ecosystem, as they did with MPI, and that they will explore and contribute to the forum and its development.

Presentations

Contribute

We welcome contributions. If you are interested in this topic, subscribe to our mailing list and join us on Slack.

Current contributors

We will soon list the initial contributors to this effort.